Methods

In this project I attempted to use the spatiotemporal sensitivity properties of the human visual system to pre-process video before encoding it with the recent H.264/AVC compression standard [H264]. The same filters that are used in image quality metrics could also be used for prefiltering.

A chain of two independent pre-processing units was built, where temporal filtering operated after the spatial one. Both units are described in more detail below.

Spatial prefiltering

Temporal prefiltering

Experiment setup

Video sequences

Four VQEG (Video Quality Experts Group) standard 8-second NTSC (525-line) reference sequences were used (available from the VQEG site). The sequences follow the ITU-R BT.656 specification.

The reason for selecting 4:2:2 standard-definition sequences was to give more weight to the chroma channels. The ideal setting would be 4:4:4, but free high-quality 4:4:4 sources were not easily available, even within the JVT community. The BT.656 frame is 720x486, while the effective picture size is 704x480, so the rightmost 16 pixels and the lowest 6 lines were ignored for filtering purposes.
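The cropping arithmetic can be sketched as follows. This is a minimal illustration, not the code used in the project: it assumes the frame has already been split into separate Y, Cb and Cr planes stored as lists of rows, and the function names are hypothetical. In 4:2:2 the chroma planes have half the horizontal resolution of luma and the full vertical resolution.

```python
FULL_WIDTH, FULL_HEIGHT = 720, 486   # frame size stated in the text
CROP_RIGHT, CROP_BOTTOM = 16, 6      # columns / lines ignored for filtering


def active_region(width=FULL_WIDTH, height=FULL_HEIGHT,
                  crop_right=CROP_RIGHT, crop_bottom=CROP_BOTTOM):
    """Return (width, height) of the area that is actually filtered."""
    return width - crop_right, height - crop_bottom


def crop_plane(plane, out_width, out_height):
    """Keep the top-left out_width x out_height part of a plane (list of rows)."""
    return [row[:out_width] for row in plane[:out_height]]


def crop_422_frame(y, cb, cr):
    """Crop planar 4:2:2 data (assumed layout): chroma is half-width, full-height."""
    width, height = active_region()
    return (crop_plane(y, width, height),
            crop_plane(cb, width // 2, height),
            crop_plane(cr, width // 2, height))


# Example with an all-zero frame.
y = [[0] * FULL_WIDTH for _ in range(FULL_HEIGHT)]
cb = [[0] * (FULL_WIDTH // 2) for _ in range(FULL_HEIGHT)]
cr = [[0] * (FULL_WIDTH // 2) for _ in range(FULL_HEIGHT)]
y_c, cb_c, cr_c = crop_422_frame(y, cb, cr)
```

Cropping 16 luma columns removes exactly 8 chroma columns, so the luma and chroma planes stay aligned after the crop.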

Sequences: City, Mobile, Football, Susie.

Codec

The H.264/AVC High 4:2:2 profile [H264] was used, with the JM 12.2 JVT reference encoder run at constant quantizer settings of 28-36 for reference slices and 30-38 for non-reference slices. The GOP structure was IBPBP...
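To make the operating points concrete, here is a small sketch of how the quantizer pairs and frame types line up. Several things in it are assumptions rather than facts from the experiment: the step between operating points is not stated (a step of 2 is an arbitrary choice here), B frames are assumed to be the non-reference slices, and a single I frame at the start of the sequence is assumed. The function names are hypothetical and are not JM configuration parameters.

```python
def qp_pairs(ref_start=28, ref_end=36, nonref_offset=2, step=2):
    """List (reference QP, non-reference QP) pairs; 'step' is an assumed value."""
    return [(qp, qp + nonref_offset)
            for qp in range(ref_start, ref_end + 1, step)]


def frame_type(display_index):
    """Frame type in display order for an IBPBP... pattern (one leading I assumed)."""
    if display_index == 0:
        return "I"
    return "B" if display_index % 2 == 1 else "P"


def qp_for_frame(display_index, ref_qp, nonref_qp):
    """Assumption: B frames are non-reference, I and P frames are reference."""
    return nonref_qp if frame_type(display_index) == "B" else ref_qp


pairs = qp_pairs()
pattern = "".join(frame_type(i) for i in range(7))
first_point = [qp_for_frame(i, *pairs[0]) for i in range(5)]
```

With these assumptions the non-reference quantizer is always 2 above the reference one, which matches the 28-36 and 30-38 ranges given above.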

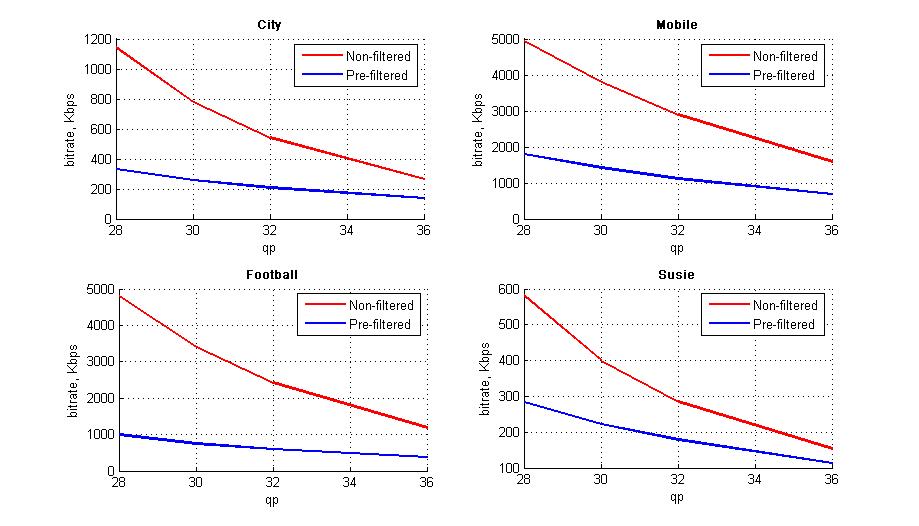

Results

Spatial prefiltering

Spatial filtering resulted in a very significant reduction of bitrate, especially at higher rates (lower quantizer values). This is unsurprising: the filter removes high-frequency coefficients that fine quantization would otherwise preserve and spend bits on. The resulting bitrate gain seems very high, though I believe it would be somewhat more modest with better-optimized encoder settings (e.g. rate control, a larger and higher-quality motion search).
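The effect of removing high frequencies before encoding can be illustrated with a toy low-pass filter. This is only a sketch under stated assumptions: S-CIELAB [ZW97] applies its spatial kernels in an opponent color space, whereas the code below applies a single separable Gaussian to one plane as a stand-in. The kernel parameters and the simple energy measure are hypothetical choices for illustration.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def filter_row(row, kernel):
    """Convolve one row with the kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    last = len(row) - 1
    out = []
    for x in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            src = min(max(x + k - radius, 0), last)
            acc += w * row[src]
        out.append(acc)
    return out


def filter_plane(plane, kernel):
    """Separable filtering: rows first, then columns."""
    rows = [filter_row(r, kernel) for r in plane]
    cols = [filter_row(list(c), kernel) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def high_freq_energy(plane):
    """Sum of squared horizontal neighbor differences (a crude detail measure)."""
    return sum((b - a) ** 2 for row in plane for a, b in zip(row, row[1:]))


kernel = gaussian_kernel(sigma=1.0, radius=3)
checker = [[255 * ((x + y) % 2) for x in range(8)] for y in range(8)]
flat = [[100] * 8 for _ in range(8)]
smooth_checker = filter_plane(checker, kernel)
smooth_flat = filter_plane(flat, kernel)
```

The checkerboard, which is the highest-frequency pattern the grid can hold, loses most of its neighbor-difference energy, while the flat plane passes through unchanged. Less detail of this kind means fewer significant transform coefficients for the encoder to code at a given quantizer.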

Temporal prefiltering

Unfortunately, while it worked satisfactorily for low-bitrate, low-quality video, the temporal prefiltering system created distortion at moving edges. Below is a frame captured from the high-motion 'football' sequence, which illustrates the problem. I therefore did not include the motion-compensated temporal filter in the results. In my opinion, with better adaptivity settings the system should not produce the 'bleeding' effects that can be seen now.
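How adaptivity settings relate to 'bleeding' can be shown with a toy temporal filter. This is not the filter used in the project: it performs no motion compensation, and the blending weight `alpha` and the `threshold` parameter are hypothetical. Each pixel is averaged with the previous filtered frame only when the two values are close, so a threshold that is too permissive lets a moving edge smear into the current frame.

```python
def temporal_filter(prev_filtered, current, alpha=0.5, threshold=10):
    """Blend each pixel with the previous frame unless the change is large.

    alpha     -- weight given to the previous filtered frame (assumed value)
    threshold -- largest absolute difference still treated as noise (assumed)
    """
    out = []
    for prev_row, cur_row in zip(prev_filtered, current):
        out_row = []
        for p, c in zip(prev_row, cur_row):
            if abs(c - p) <= threshold:
                out_row.append(alpha * p + (1.0 - alpha) * c)
            else:
                out_row.append(float(c))  # treated as motion: keep current pixel
        out.append(out_row)
    return out


# A vertical edge that moves one pixel to the right between frames.
previous = [[0, 0, 255, 255]]
current = [[0, 0, 0, 255]]

strict = temporal_filter(previous, current, threshold=10)
permissive = temporal_filter(previous, current, threshold=255)
```

With the strict threshold the output equals the current frame, while the permissive one leaves a half-intensity ghost (127.5) where the edge used to be, which is the kind of artifact described above.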

Conclusions

- S-CIELAB filters seem to significantly reduce the bitrate at constant quantizer settings.

- The current implementation of temporal pre-filtering did not perform well; further tuning might be helpful.

References

[H264] ITU-T Recommendation H.264: Advanced video coding for generic audiovisual services.

[PW93] A. Poirson, B. Wandell, Appearance of colored patterns: pattern-color separability, Journal of the Optical Society of America, 10(12), pp. 2458-2470, 1993.

[ZW97] X. Zhang, B. Wandell, A spatial extension of CIELAB for digital color image reproduction, SID Journal, 5(1), pp. 61-64, 1997.

[TH99] X. Tong, D. Heeger, C. Van den Branden Lambrecht, Video quality evaluation using ST-CIELAB, Proceedings of SPIE, Volume 3644, pp. 185-196, May 1999.

[DS84] E. Dubois, S. Sabri, Noise reduction in image sequences using motion-compensated temporal filtering, IEEE Transactions on Communications, 32, pp. 826-831, 1984.

[BO92] J. Boyce, Noise reduction of image sequences using adaptive motion compensated frame averaging, IEEE ICASSP, volume 3, pp. 461-464, 1992.

[WA95] B. Wandell, Foundations of Vision, Sinauer Associates, 1995.

[PY03] C. Poynton, Digital Video and HDTV: Algorithms and Interfaces, Morgan Kaufmann, 2003.